In this post, the team working on networking shares a collaborative vision for container networking on Cloud Foundry and wants feedback from developers, architects, operations and network leaders. We will cover the fundamental concept and discuss technical prerequisites, goals and requirements, overlay networks, external resource policies, and service discovery.

This article builds on the container networking scenarios originally described in our open-source foundation proposal, and we are looking for feedback from the community. As we’ve worked with customers and begun building the software foundations for container networking in the open source Cloud Foundry, we’ve broadened our view of the problem space. This article discusses what we’ve learned and where we think we’re going.

Fundamental Concept

The key entity in Cloud Foundry is the application. As we work with users to build and deploy more cloud-native applications, which often include microservices, the demand for direct instance-to-instance communication is increasing. Application networking becomes a strategic imperative within Cloud Foundry. If we think of the graph of applications (both within Cloud Foundry and external), we are interested in enabling the “edges”, and “policy” is the set of edges.

Notice that we have not talked about Cloud Foundry orgs and spaces. Orgs and spaces are mechanisms for grouping related resources into a hierarchy and controlling what resources people are able to consume, ensuring proper authentication. In the cloud-native microservice world we are building, applications will communicate with each other without consideration for human authentication boundaries. We believe application networking is an orthogonal concern to orgs and spaces.

Conversely, our proposed design doesn’t prevent a Cloud Foundry operations team from composing groups of applications along their human-centric boundaries. For example, an operator can achieve “space networking” by enumerating all the applications associated with a space and granting them mutual permissions.
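As a rough illustration of the “space networking” idea, the sketch below expands the applications in one space into mutual permissions. The function name, the application names, and the representation of a policy as (source, destination) pairs are all assumptions made for this example, not part of any Cloud Foundry API.

```python
from itertools import permutations


def space_policies(app_ids):
    """Return directed (source, destination) edges that let every
    application in a space reach every other application in it."""
    unique = sorted(set(app_ids))
    # permutations(..., 2) yields every ordered pair of distinct apps,
    # so each pair is permitted in both directions and no app is
    # paired with itself.
    return set(permutations(unique, 2))


# Hypothetical applications that belong to a single space.
space_apps = ["frontend", "cart", "reporting"]
policies = space_policies(space_apps)

for source, destination in sorted(policies):
    print(f"{source} is permitted to reach {destination}")
```

The policy itself still only names applications; the space is used once, at the moment the operator enumerates its members.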

Technical Prerequisites

We know from experience and customer feedback that we cannot assume most network technologies will play nicely with opaque, NATed instances. We take the end-to-end principle as a fundamental tenet, and the competitive landscape also reflects this assertion. To achieve broad integration, we need a unique IP address per application instance, and we need a single network interface per container. This has the effect of decoupling the host networking from the application networking, making the management of both simpler.

Providing each application instance with a unique IP address provides direct addressability. This means that traffic can be correlated to that specific instance and filtered by application.

Why a single network interface? If instance IPs are not unique and we add network interfaces instead, we add significant complexity. Developers then have to ensure they are binding to the right interface, communicating on the right interface (depending on what they are trying to reach), and routing correctly within the IP stack. Additionally, service discovery must decide what IP to return for a service based on who is asking, and on and on. It’s messy and does not enable the simple experience we want for developers.

Overlay Networks – A Potential Solution

Users (that is, developers and operations teams deploying on Cloud Foundry) want application isolation and easy-to-use connectivity—making their lives easier is our fundamental value proposition. Again and again, we hear architects and network admins asking for transparency in what’s actually on the wire. They need the ability to identify, classify and authorize packets inside (and egressing) Cloud Foundry networks, and they need to be able to discern those packets by Cloud Foundry application.

Increasingly, overlay networks are the preferred way to connect cloud-based applications in a (more or less) transparent manner. Our proposal for applying application identity to network traffic has its roots in VXLAN overlay networks. However, we repurpose some of its internals to attach metadata without modifying any userland applications. VXLAN benefits from standard tooling and widespread implementation. In addition, it is a well-known way to isolate traffic streams. At the same time, we’re not planning to leverage some of the “magic” features like “learning” via flooding and explicit tunneling. This way, both routing and the control plane are under the direction of Cloud Foundry.

VXLAN’s encapsulation scheme includes a Virtual Network Identifier (“VNI”) meant to distinguish virtual overlay networks. Given our need to tag packets to applications, we can build an overlay that uses VNI as application identifier. All instances of the same application would share a single VNI (“app ID”) that is unique to that application. Then, topology can be simply described as tuples of identifiers (“App ID 123 is permitted to reach App ID 789”), and ingress and egress points can filter based on application identifier. In conversation, that might mean, “I permit access to the data service (789) from the reporting application (aka 123).” Of course, this can be extended to include protocols, ports, and other relevant fields. The terminology stays at the correct level of abstraction, without devolving into tracking volatile attributes like IP address and port. (We’re also looking at several existing proposals, such as the VXLAN Group Policy Option, and we’re open to other suggestions.)
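To make the tagging idea concrete, here is a minimal sketch that packs an app ID into the 24-bit VNI field of a standard 8-byte VXLAN header (the RFC 7348 layout: 8 flag bits, 24 reserved bits, 24 VNI bits, 8 reserved bits) and checks it against a set of policy tuples. The function names and the `ALLOWED` set are hypothetical, and the sketch assumes the filtering point already knows the app ID of the destination instance; the proposal above does not specify how that lookup works.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08   # the "I" flag defined in RFC 7348
MAX_VNI = (1 << 24) - 1       # the VNI field is 24 bits wide


def pack_vxlan_header(app_id):
    """Build an 8-byte VXLAN header that carries app_id in the VNI field."""
    if not 0 <= app_id <= MAX_VNI:
        raise ValueError("app ID does not fit in a 24-bit VNI")
    # First 32-bit word: flags in the top byte, 24 reserved bits.
    # Second 32-bit word: VNI in the top 24 bits, 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, app_id << 8)


def unpack_app_id(header):
    """Recover the app ID from the first 8 bytes of a VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag is not set")
    return vni_word >> 8


# Hypothetical policy: "App ID 123 is permitted to reach App ID 789".
ALLOWED = {(123, 789)}


def is_permitted(source_app_id, destination_app_id):
    """Return True when policy contains the (source, destination) tuple."""
    return (source_app_id, destination_app_id) in ALLOWED


header = pack_vxlan_header(123)
print(is_permitted(unpack_app_id(header), 789))
```

Note that the 24-bit field caps the number of distinct app IDs at roughly 16.7 million, and that the policy is directional in this sketch: permitting 123 to reach 789 says nothing about traffic initiated by 789.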

We have some open questions, and we would like to hear from you in the comments below or on the Cloud Foundry #container-networking Slack channel.

- If we use an overlay network, can we number containers from a consistent pool? (That is, can we assume a sufficiently large contiguous block of addresses, such as from RFC1918 space?)

- Do you need overlapping IP addresses or IP address reuse? (For example, are you offering “BYO address space” to customers, and need a way to “virtualize” the reuse of RFC1918 space?) If you operate a multi-tenant Cloud Foundry, would you need IP address reuse, such as RFC1918 space being reused for each Cloud Foundry tenant?

- Not everyone wants (or needs) to run an overlay network in their infrastructure. We considered IPv6 for overlay and underlay networks. However, customer feedback, adoption friction, and real-world complexities have led us to believe IPv6 is sub-optimal. If you disagree, we’re open to the feedback.

What Developers and Operations Want

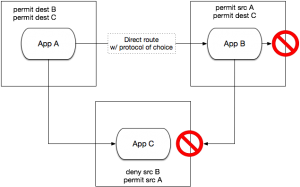

We think of three distinct network communications scenarios for Cloud Foundry users.

1. Container to container

Container-to-container networking was the focus of the original networking proposal to the community, and it is a key enabler for microservices. Cloud Foundry will manage the inter-application traffic and tag all traffic with an application identifier, and instance traffic will be filtered on ingress and egress as defined by policy.

2. Container to BOSH-deployed service

This includes services that are directly integrated with Cloud Foundry, usually using “service brokers.” For example, the platform offers MySQL and RabbitMQ as services. Cloud Foundry could apply the same tagging and provide the same filtering for these services. This feature could be added in a future release, and the precise deployment details aren’t currently defined.

3. Container to external service, such as a database

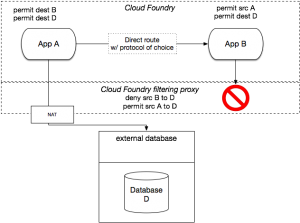

The container-to-container scenario is important to developers, but is different from what network and security administrators really want. The prevalent scenario is an organization with an established database that lives outside Cloud Foundry. As clients in Cloud Foundry make requests for data, how can security administrators filter the traffic from Cloud Foundry by application, using the same policy language and tools? The environment changes continuously and IP/port assignments are too dynamic for the typical firewall update process.

Connecting to External Resources

When clients connect to external resources in this new model, our goal is to make source addressing predictable and to enforce policy on the administrator’s behalf. When an application instance originates traffic to an external service, the traffic will be encapsulated and tagged with the appropriate application identifier. The traffic is then directed to a Cloud Foundry network proxy service.

This proxy terminates the connection at the “edge” of the Cloud Foundry perimeter, decapsulates and filters the packets according to policy, and then applies NAT and forwards the traffic on to external services. In this model, security administrators only have to allow the IP addresses from the Cloud Foundry NAT pool to access the external services. Cloud Foundry filters all traffic in both directions as defined by the policy configured by the Cloud Foundry administrator. As a benefit, this design also removes any need for external firewalls to learn the internal details of the Cloud Foundry network. No integration is required.

Suppose you had a shopping cart app, “App A,” that needs to talk to a database, plus some other app, “App B,” that isn’t allowed to talk to the database. Cloud Foundry would enforce this policy on traffic for the application instances and the traffic egressing to the database.
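The App A and App B scenario can be sketched as a toy egress proxy that filters on the application tag and then rewrites the source address. Everything here is an assumption made for illustration: the `Packet` type, the numeric app IDs, the documentation-range IP addresses, the database port, and the single-address NAT pool. It is not a description of the actual proxy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    app_id: Optional[int]  # overlay tag; None once decapsulated
    src_ip: str
    dst_ip: str
    dst_port: int


# Hypothetical identifiers and addresses (documentation IP ranges).
APP_A, APP_B = 1, 2
DATABASE = ("192.0.2.10", 5432)
EGRESS_POLICY = {(APP_A, DATABASE)}  # only App A may reach the database
NAT_POOL = ["198.51.100.1"]


def proxy_egress(packet):
    """Filter a tagged packet by policy, then NAT it toward the
    external service. Returns None when the packet is dropped."""
    destination = (packet.dst_ip, packet.dst_port)
    if (packet.app_id, destination) not in EGRESS_POLICY:
        return None  # no matching policy tuple: drop
    # Strip the overlay tag and rewrite the source to a NAT pool address,
    # so the external side only ever sees pool addresses.
    return Packet(app_id=None, src_ip=NAT_POOL[0],
                  dst_ip=packet.dst_ip, dst_port=packet.dst_port)


from_a = proxy_egress(Packet(APP_A, "10.255.1.4", *DATABASE))
from_b = proxy_egress(Packet(APP_B, "10.255.2.9", *DATABASE))
print(from_a)
print(from_b)
```

In this sketch the database administrator would only need to allow the NAT pool address, while the per-application decision stays inside Cloud Foundry.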

As a side note, this design could conceivably be applied to a network that doesn’t use encapsulation or overlays. If an operations team had sufficiently large, free, contiguous address space to devote to all possible Cloud Foundry hosts and application containers (a daunting proposition for most), it’s feasible that Cloud Foundry could be modified to apply “flat” addressing without overlays, and the policy service could be modified to implement IP-based firewall rules (e.g. iptables) to route and filter traffic. That said, this is an unusually sophisticated case, and not what most customers need from a “batteries included” networking solution.
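For the “flat” addressing variant, a policy service would have to expand each application-level tuple into per-instance IP rules. The sketch below only generates rule strings to show that expansion; the function name, the instance addresses, and the choice of the `FORWARD` chain are assumptions, and nothing here runs `iptables`.

```python
def expand_to_ip_rules(policy, instances):
    """Expand (source app, destination app) tuples into one rule string
    per (source instance IP, destination instance IP) pair."""
    rules = []
    for source_app, destination_app in sorted(policy):
        for source_ip in instances.get(source_app, []):
            for destination_ip in instances.get(destination_app, []):
                rules.append(
                    f"iptables -A FORWARD -s {source_ip} "
                    f"-d {destination_ip} -j ACCEPT"
                )
    return rules


# Hypothetical instance addresses for app 123 (two instances)
# and app 789 (one instance).
instances = {
    123: ["10.255.1.4", "10.255.2.9"],
    789: ["10.255.3.7"],
}
rules = expand_to_ip_rules({(123, 789)}, instances)
for rule in rules:
    print(rule)
```

One policy tuple becomes one rule per pair of instances, and the rule set has to be regenerated whenever an instance starts, stops, or moves, which is the volatility the application-identifier approach is meant to avoid.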

Again, we’d like your feedback on this design:

- How would you want to define external services in this model? As separate service instances? As simple network endpoints in the policy language? Something else?

- Would you want a prebuilt proxy (Linux binary or packaged VM) that you can run “in front of” your external service and that filters for you? If we built this, would it be acceptable to “enlighten” Cloud Foundry by assigning your external service (its proxy, really) a pseudo-“application id” in our Policy Service in order to enforce policy?

- If you need to filter outside Cloud Foundry, such as on a firewall, can you configure your firewall to process VXLAN? Some customers can process VXLAN and might want to do their own filtering. This configuration would require integration to discover application identifiers, so how would that work?

- If you use hardware firewalls, do they speak OpenFlow? Would you be interested in a tool for automatically generating OpenFlow rules to enforce policy? How might you use it?

We are looking forward to the feedback!