This article provides an overview of the current state of using the Cloud Foundry (CF) platform for artificial intelligence (AI). It is based on a talk by Long Nguyen, a BOSH tinkerer and CF enthusiast, at the latest Cloud Foundry Day in NYC, where he walked us through the steps he took to set up GPUs on BOSH and the challenges he faced along the way.

Members of the Cloud Foundry community tend to view cloud compute through the lens of BOSH, the open-source tool that deploys distributed systems such as Cloud Foundry and manages their lifecycle. Demand for GPU acceleration is surging, and BOSH already offers a compelling way to manage the lifecycle of the software it deploys. What if you could combine the robustness of BOSH with the raw processing power of GPUs? Nguyen’s talk delves into the technical nitty-gritty of setting up GPUs on BOSH, exploring the challenges and potential solutions for cloud infrastructure engineers.

Yoking the GPU Power Horse

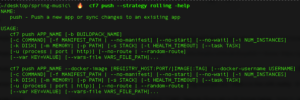

BOSH can attach GPUs to virtual machines (VMs) in more than one way. Here is a breakdown of the two most common approaches:

- Accelerator-Optimized Machine Types: This is the simplest approach. Some cloud providers offer pre-configured VM types with GPUs already attached, and BOSH can use them like any other VM type. You select the desired type when defining the VM, and the GPU is provisioned automatically. However, this method offers limited flexibility in terms of GPU selection and instance customization.

- Cloud Property Configuration: For more granular control, you can set cloud properties when creating the VM. This approach lets you specify the exact GPU type and quantity required. However, the BOSH Cloud Provider Interface (CPI) must understand those properties and attach the GPUs accordingly, which may mean modifying the CPI. That can involve complex configuration changes and compatibility issues across CPI versions.
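To make the two approaches concrete, here is a minimal Python sketch that emits BOSH cloud-config `vm_types` entries for each. The `cloud_properties` keys (`machine_type`, `accelerators`, `on_host_maintenance`) are modeled on the Google Cloud CPI and should be treated as assumptions; check your CPI's documentation for the exact names it accepts. The machine type and GPU names are illustrative only.

```python
import json


def accelerator_vm_type(name: str, machine_type: str) -> dict:
    """Approach 1: pick a machine type that already bundles GPUs.

    The GPU model and count are fixed by the machine type itself.
    """
    return {
        "name": name,
        "cloud_properties": {
            "machine_type": machine_type,
            # GPU VMs on Google Cloud cannot live-migrate; assumed CPI key.
            "on_host_maintenance": "TERMINATE",
        },
    }


def custom_gpu_vm_type(name: str, machine_type: str, gpu_type: str, gpu_count: int) -> dict:
    """Approach 2: request GPUs explicitly through cloud properties.

    Only works if the CPI understands the (assumed) `accelerators` key.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return {
        "name": name,
        "cloud_properties": {
            "machine_type": machine_type,
            "accelerators": [{"type": gpu_type, "count": gpu_count}],
            "on_host_maintenance": "TERMINATE",
        },
    }


cloud_config = {
    "vm_types": [
        accelerator_vm_type("gpu-a100", "a2-highgpu-1g"),
        custom_gpu_vm_type("gpu-t4", "n1-standard-8", "nvidia-tesla-t4", 2),
    ]
}

# YAML parsers generally accept JSON, so this output can seed a cloud config file.
print(json.dumps(cloud_config, indent=2))
```

The first form leaves the GPU choice to the provider's catalog; the second trades that simplicity for per-VM control, at the cost of depending on CPI support for the extra properties.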

Resolving the Driver Dependencies

Once the GPUs are attached, driver installation is the next hurdle. Here is a breakdown of the different strategies available:

- Custom Stemcells with Pre-Installed Drivers: This approach seems convenient at first: you build and deploy custom stemcells preloaded with the necessary GPU drivers. However, keeping these custom stemcells current with every new stemcell version and driver update can become a logistical nightmare.

- Expanding Root Disks via CPIs: A more sustainable solution uses the ability of BOSH CPIs to enlarge the root disk of a VM, making room to install the required drivers directly on each BOSH-deployed VM during boot. Because the stemcell itself stays stock, this approach offers greater flexibility and simplifies driver management in the long run.
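The boot-time approach can be sketched as a small check that a job's startup hook might run before installing drivers. This is a minimal Python sketch under stated assumptions: the 10 GiB requirement is a hypothetical placeholder rather than a measured driver size, and detecting an existing driver by looking for `nvidia-smi` on `PATH` is one heuristic among several. The actual installation command is left out because it depends on the driver package and operating system.

```python
import shutil
from dataclasses import dataclass

# Hypothetical space budget for driver + CUDA libraries; tune for your stack.
REQUIRED_BYTES = 10 * 1024**3


@dataclass
class DriverPlan:
    action: str  # "skip", "install", or "fail"
    reason: str


def plan_driver_install(free_bytes: int, required_bytes: int, driver_present: bool) -> DriverPlan:
    """Decide what a boot-time hook should do; a pure function, easy to test."""
    if driver_present:
        # Re-running the hook on the same VM should not reinstall the driver.
        return DriverPlan("skip", "driver already installed")
    if free_bytes < required_bytes:
        # Typical symptom of a root disk that was not enlarged via the CPI.
        return DriverPlan("fail", f"need {required_bytes} bytes, have {free_bytes}")
    return DriverPlan("install", "enough root disk space")


def plan_for_this_host(root: str = "/") -> DriverPlan:
    """Gather facts from the local machine and delegate to the pure function."""
    free = shutil.disk_usage(root).free
    present = shutil.which("nvidia-smi") is not None
    return plan_driver_install(free, REQUIRED_BYTES, present)


if __name__ == "__main__":
    print(plan_for_this_host())
```

Keeping the decision separate from fact gathering makes the logic testable off the VM, and the skip branch matters because startup hooks can run more than once on the same machine.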

Cloud Foundry and GPUs: A Match Still Being Made

Cloud Foundry provides a solid foundation for deploying and managing applications. In its current form, however, it may not fully exploit the potential of GPUs.

- Scaling Challenges: Scaling GPU-intensive applications like large language models (LLMs) within Cloud Foundry can be cumbersome. The platform’s current horizontal scaling mechanisms might not be optimized for workloads that heavily rely on GPU resources.

- Multi-Tenancy Hurdles: Cloud Foundry currently lacks robust multi-tenancy support for GPU workloads. This can be a limitation for scenarios where multiple applications require access to the same GPU resources.
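Why sharing is hard can be illustrated with a toy allocator that hands out whole GPUs exclusively, which is roughly what happens when each VM or container owns its devices outright. This is a hypothetical Python sketch, not how Cloud Foundry schedules anything: once every device is claimed, further applications get nothing, even if the claimed GPUs sit mostly idle.

```python
from typing import Dict, List, Optional


class ExclusiveGpuPool:
    """Toy model: each GPU belongs to at most one app at a time."""

    def __init__(self, gpu_ids: List[str]) -> None:
        self._free = list(gpu_ids)
        self._owner: Dict[str, str] = {}

    def claim(self, app: str) -> Optional[str]:
        """Give the app a whole GPU, or None if the pool is exhausted."""
        if not self._free:
            return None
        gpu = self._free.pop(0)
        self._owner[gpu] = app
        return gpu

    def release(self, gpu: str) -> None:
        """Return a GPU to the pool when its owner stops."""
        if self._owner.pop(gpu, None) is not None:
            self._free.append(gpu)


pool = ExclusiveGpuPool(["gpu0", "gpu1"])
print(pool.claim("chat-app"))    # gpu0
print(pool.claim("search-app"))  # gpu1
print(pool.claim("summarizer"))  # None: no sharing, so the third app waits
```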

Opportunities Within The Community

While Cloud Foundry itself has limitations for certain GPU workloads, alternative approaches can still unlock the hardware’s potential. One promising option is offering LLMs as a service through a dedicated Cloud Foundry service broker. Developers can then integrate LLMs into their Cloud Foundry applications without worrying about the underlying infrastructure, while the service broker handles GPU resource management and ensures proper isolation between consumers.
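The broker idea maps onto the Open Service Broker API, which Cloud Foundry uses for marketplace services: provisioning creates a service instance, and binding returns credentials that the platform exposes to the app in `VCAP_SERVICES`. The in-memory Python sketch below models only that flow, with no HTTP layer. The class name, the one-GPU-per-instance policy, the endpoint URL format and the credential fields are all hypothetical; a real broker would also implement the catalog, deprovision and unbind calls and run behind authentication.

```python
import secrets
from typing import Dict, List


class LlmBroker:
    """Hypothetical in-memory model of an LLM service broker.

    provision() mirrors PUT /v2/service_instances/:instance_id and
    bind() mirrors PUT /v2/service_instances/:instance_id/service_bindings/:binding_id
    from the Open Service Broker API; the HTTP layer is omitted.
    """

    def __init__(self, gpu_ids: List[str]) -> None:
        self._free_gpus = list(gpu_ids)
        self._instances: Dict[str, str] = {}  # instance_id -> GPU backing it

    def provision(self, instance_id: str) -> None:
        """Reserve one GPU per service instance, isolating tenants."""
        if instance_id in self._instances:
            return  # repeated provision requests are idempotent in this sketch
        if not self._free_gpus:
            raise RuntimeError("no GPU capacity left for a new instance")
        self._instances[instance_id] = self._free_gpus.pop(0)

    def bind(self, instance_id: str, binding_id: str) -> Dict[str, str]:
        """Return credentials an app would see in VCAP_SERVICES."""
        if instance_id not in self._instances:
            raise KeyError(f"unknown instance {instance_id}")
        return {
            # Hypothetical internal endpoint served from the reserved GPU.
            "uri": f"https://llm.internal/{instance_id}",
            "api_key": secrets.token_hex(16),
            "binding_id": binding_id,
        }


broker = LlmBroker(["gpu0"])
broker.provision("team-a")
creds = broker.bind("team-a", "app-1")
print(sorted(creds))  # ['api_key', 'binding_id', 'uri']
```

Putting GPU bookkeeping in the broker keeps app developers on the familiar `cf create-service` and `cf bind-service` workflow while operators decide how instances map to hardware.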

Currently, BOSH stemcells lack support for booting via the Extensible Firmware Interface (EFI). This gap blocks the use of newer GPUs with larger memory capacities (greater than 16 GB), so enabling EFI support in stemcells is crucial to unlocking the full potential of these powerful GPUs.
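On a Linux VM, a quick way to see whether it booted through EFI is to look for `/sys/firmware/efi`, which the kernel exposes only on EFI boots. The Python sketch below pairs that check with the 16 GB threshold from the talk; treating 16 GB as a hard cutoff is a simplification taken from the talk's summary, not a vendor rule, and the function names are hypothetical.

```python
import os

# Threshold quoted in the talk; larger GPUs were reported to need EFI boot.
EFI_THRESHOLD_GB = 16


def booted_with_efi(sysfs_path: str = "/sys/firmware/efi") -> bool:
    """Linux creates this directory only when the kernel was booted via (U)EFI."""
    return os.path.isdir(sysfs_path)


def gpu_needs_efi(gpu_memory_gb: float) -> bool:
    """True when the GPU's memory exceeds the threshold from the talk."""
    return gpu_memory_gb > EFI_THRESHOLD_GB


def check_vm(gpu_memory_gb: float) -> str:
    """Flag a GPU/firmware combination the talk describes as unusable."""
    if gpu_needs_efi(gpu_memory_gb) and not booted_with_efi():
        return "incompatible: GPU needs EFI but the VM did not boot via EFI"
    return "ok"


if __name__ == "__main__":
    print(check_vm(40))  # e.g. a 40 GB card
```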

While challenges exist, the potential benefits for developers and the broader cloud ecosystem are immense. For now, setting up GPUs on BOSH requires careful attention to several technical aspects: how the GPUs are attached, how their drivers are installed, and whether the stemcells can boot with EFI.

Given the size of the Cloud Foundry community, we can expect further progress in applying Cloud Foundry technologies to AI.