How is Cloud Foundry Transitioning from Consul?

Changing Diego’s Architecture

In Part I of this series, we discussed how BOSH has problems managing Consul as a stateful clustered service. In this post, we’ll go over how Cloud Foundry, and particularly its Diego runtime, is taking steps towards removing Consul and utilizing a different approach to provide high availability, service discovery and distributed locking.

The primary components in Diego that utilize Consul are the Bulletin Board System (BBS), the Auctioneer, the Rep and the Route-Emitters. For details of how these components use Consul, see Part I of this series. Each component requires a different strategy for this transition. In what follows, we’ll go over how each of the above Diego components will implement discovery, distributed locking and high availability.

1. BBS and Auctioneer Locks

As discussed before, Consul solves the problem of having active-passive high availability for Diego components by allowing leader election through a distributed consensus and locking mechanism implemented on top of it. In the absence of Consul, Diego moved the implementation of the locking mechanism to a relational database.

Essentially, acquiring the lock in the BBS becomes a database transaction in which a BBS instance writes its identity into the designated lock record in the database. The instance whose identity is stored in that record is deemed to be the owner of the lock, and hence the master instance.

The master instance also submits a TTL at the time of updating the database record, and continuously refreshes its TTL for as long as it is still the leader. There is a global threshold for the validity of the TTL record in the database and the passive instances constantly check the lock record in the database to see whether the specified TTL has expired.

For as long as the TTL is valid, the passive instances remain in passive mode. However, as soon as the TTL expires (e.g., due to a failure of some type in the master BBS instance), the passive instances compete again to acquire the lock and become the active BBS.
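The acquire, refresh and take-over cycle described above can be sketched as follows. This is a minimal illustration and not Diego's actual implementation: the `locks` table, its columns and the `try_acquire` function are hypothetical names, SQLite stands in for the relational database, and the current time is passed in explicitly so that the behavior is easy to follow.

```python
import sqlite3

# Hypothetical schema: one row per named lock, holding its owner and expiry.
SCHEMA = """
CREATE TABLE IF NOT EXISTS locks (
    name       TEXT PRIMARY KEY,
    owner      TEXT NOT NULL,
    expires_at REAL NOT NULL
)
"""


def try_acquire(conn, name, owner, ttl, now):
    """Acquire or refresh a lock; return True if `owner` holds it afterwards."""
    with conn:  # a single transaction: commit on success, roll back on error
        # Create the lock record if nobody has ever held this lock.
        conn.execute(
            "INSERT OR IGNORE INTO locks (name, owner, expires_at) VALUES (?, ?, ?)",
            (name, owner, now + ttl),
        )
        # Refresh the TTL if we already own the lock, or take the lock over
        # if the previous owner's TTL has expired.
        conn.execute(
            "UPDATE locks SET owner = ?, expires_at = ? "
            "WHERE name = ? AND (owner = ? OR expires_at <= ?)",
            (owner, now + ttl, name, owner, now),
        )
        row = conn.execute(
            "SELECT owner FROM locks WHERE name = ?", (name,)
        ).fetchone()
    return row[0] == owner


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
print(try_acquire(conn, "bbs", "bbs-0", ttl=15, now=0))   # True: lock was free
print(try_acquire(conn, "bbs", "bbs-1", ttl=15, now=5))   # False: TTL still valid
print(try_acquire(conn, "bbs", "bbs-0", ttl=15, now=10))  # True: owner refreshes
print(try_acquire(conn, "bbs", "bbs-1", ttl=15, now=30))  # True: TTL expired
```

In this sketch a passive instance simply calls `try_acquire` periodically; it stays passive for as long as the call returns `False`.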

2. Route-Emitter Locks

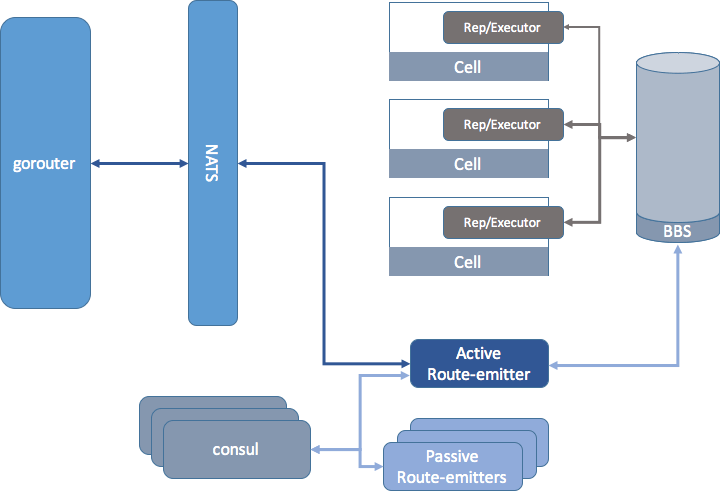

Unlike with the BBS and the Auctioneer, Diego follows a different pattern for removing the lock from its Route-Emitters. The Consul-dependent architecture for Diego assumed that only one active instance of the Route-Emitter was responsible for receiving all routing information from the Cells running applications. In this single-instance architecture, Consul played a significant role in both implementing the active-passive locking mechanism and providing discoverability for the active Route-Emitter instance. The figure below shows how a global Route-Emitter used Consul within a Diego deployment.

In a re-architecture of Diego, Route-Emitters were modified to be deployed on each Diego Cell separately and to store only the given Cell’s routing information. Routes in the Route-Emitters are updated through events sent from the BBS based on changes in the status of the applications on each Cell as perceived by the BBS or the Cell Reps. The figure below illustrates the redesign of Diego with local Route-Emitters.

As such, in the new architecture, the BBS is responsible for relaying these messages to each Route-Emitter separately. In the case of the single instance Route-Emitter, this was done by sending all traffic from the BBS to the active Route-Emitter where the active Route-Emitter would sync the status of the routes between the BBS and the GoRouter in Cloud Foundry.

However, in the case of Cell-local Route-Emitters, the BBS needs to dispatch the event messages to all Route-Emitters, which may multiply the amount of traffic in the network. This can be alleviated by filtering the BBS events so that each Route-Emitter receives only the routing events related to the Cell it is placed on. Furthermore, each local Route-Emitter needs to go through the list of active long running processes (LRPs) and extract only the information of the LRPs relevant to the Cell it is managing before syncing back with the GoRouter on how the routes to the LRPs need to be updated.
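The per-Cell filtering idea can be illustrated with a small sketch. The `LRPEvent` type, its fields and both functions below are hypothetical and do not reflect the BBS event API; the sketch only shows a local Route-Emitter keeping the events for its own Cell and folding them into a routing table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LRPEvent:
    """Hypothetical routing event for one LRP instance."""
    cell_id: str        # Cell on which the LRP instance runs
    process_guid: str   # identifies the application process
    kind: str           # "created" or "removed"
    address: str        # host:port where the instance listens


def events_for_cell(events, cell_id):
    """Keep only the events that concern the given Cell."""
    return [event for event in events if event.cell_id == cell_id]


def apply_events(routes, events):
    """Fold events into a table mapping process_guid to a set of addresses."""
    for event in events:
        backends = routes.setdefault(event.process_guid, set())
        if event.kind == "created":
            backends.add(event.address)
        elif event.kind == "removed":
            backends.discard(event.address)
        if not backends:
            # No instances of this process are left on the Cell.
            del routes[event.process_guid]
    return routes


stream = [
    LRPEvent("cell-a", "app-1", "created", "10.0.0.1:61001"),
    LRPEvent("cell-b", "app-1", "created", "10.0.0.2:61001"),
    LRPEvent("cell-a", "app-2", "created", "10.0.0.1:61002"),
    LRPEvent("cell-a", "app-2", "removed", "10.0.0.1:61002"),
]
local_routes = apply_events({}, events_for_cell(stream, "cell-a"))
print(local_routes)  # {'app-1': {'10.0.0.1:61001'}}
```

Whether the filtering happens on the BBS side or in the Route-Emitter is a deployment decision; the sketch filters on the receiving side only for simplicity.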

One inherent benefit of the new architecture is that the local Route-Emitters are more resilient to failures and the overall system degrades gracefully. If a local Route-Emitter fails, only the LRPs local to its Cell become unreachable. With enough replication at the application level, the application as a whole remains functional because traffic is forwarded to its other instances residing on other healthy Cells.

3. Cell Presences

Diego Cells relied on Consul to maintain their presences. A Cell presence is a mechanism used to notify the BBS that the Cell is running and to allow the Auctioneer to assign new jobs to Cells based on their available resource capacities. As with other components in Diego, when Consul is unavailable, Cells fail to record their presence in Consul's persistent storage. Compared to the case of the BBS and the Route-Emitters, losing Cell presences is a less severe issue, mostly because no new jobs are passed to the Cells while Consul is gone, and the Cells reset their presences once Consul is back up.

Cell presences have also been moved to the relational database. As with locks, each Cell sends a TTL along with its presence when refreshing its state in the database. A Cell whose TTL has expired is considered gone, and the jobs allocated to it may be reassigned elsewhere in the system. The Auctioneer and the BBS also rely on the Cell presence information in the database to allocate new jobs to the Cells and to determine the health and availability of the Cells.
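A rough sketch of presence records with a TTL, and of how a scheduler might use them, is shown below. All names here (`Presence`, `PresenceStore`, `pick_cell`) are hypothetical, an in-memory dictionary stands in for the database table, and choosing the Cell with the most free memory is an assumed placement rule rather than the Auctioneer's real algorithm.

```python
from dataclasses import dataclass


@dataclass
class Presence:
    """Hypothetical presence record for one Cell."""
    cell_id: str
    free_memory_mb: int
    expires_at: float


class PresenceStore:
    """In-memory stand-in for the presence table in the database."""

    def __init__(self):
        self._rows = {}

    def refresh(self, cell_id, free_memory_mb, ttl, now):
        # Each refresh overwrites the Cell's record and extends its TTL.
        self._rows[cell_id] = Presence(cell_id, free_memory_mb, now + ttl)

    def live_cells(self, now):
        # A Cell whose TTL has expired is treated as gone.
        return [p for p in self._rows.values() if p.expires_at > now]


def pick_cell(store, required_memory_mb, now):
    """Return the live Cell with the most free memory, or None if none fits."""
    candidates = [
        p for p in store.live_cells(now) if p.free_memory_mb >= required_memory_mb
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.free_memory_mb).cell_id


store = PresenceStore()
store.refresh("cell-a", free_memory_mb=2048, ttl=30, now=0)
store.refresh("cell-b", free_memory_mb=4096, ttl=30, now=0)
store.refresh("cell-a", free_memory_mb=2048, ttl=30, now=25)  # cell-b stops refreshing
print(pick_cell(store, 1024, now=10))  # cell-b: both Cells are live
print(pick_cell(store, 1024, now=40))  # cell-a: cell-b's TTL has expired
```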

4. Service Discovery

Clients of a service typically want to have a single address to talk to, and let DNS handle balancing requests among service instances rather than having to manually manage a list of server addresses. These problems can be solved by supporting wildcard DNS.

Currently, Cloud Foundry provides an internal wildcard DNS through Consul. Consul is a strongly consistent service discovery platform that, as discussed, relies heavily on the Raft algorithm to form a quorum. Our challenge is that we could end up with a massive down event if quorum were lost in the Consul cluster. In that case, the key-value store in the Consul cluster can no longer accept writes or permit reads, which in turn could bring down the service discovery platform.

Cloud Foundry is pursuing a new track of work to add service discovery to BOSH through a service referred to as the BOSH-aware DNS server. One of the key differences and driving factors behind BOSH DNS is that it will be a service discovery platform that favors availability, as opposed to the strongly consistent service discovery that Consul offers.

From our experience, we have determined that the service discovery solution required by Cloud Foundry needs to be available by nature. A typical Cloud Foundry deployment does not have a high level of turbulence in its topology, which means that service addresses do not change frequently. Therefore, by replacing a consistent service discovery platform with an available one, we can ensure that service definitions remain available in the event of a partial system outage.
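To make the trade-off concrete, here is a small sketch of a resolver that favors availability: it keeps answering from its last known records when the source of truth cannot be reached, accepting that an answer may be stale. This is not how BOSH DNS is implemented; the `AvailableResolver` class, the `fetch_records` callback and the hostname used are all hypothetical.

```python
class AvailableResolver:
    """Serves the last known records even when the record source is down."""

    def __init__(self, fetch_records):
        # fetch_records is a hypothetical callable that returns a mapping of
        # name -> list of addresses and raises ConnectionError when the
        # source of truth is unreachable.
        self._fetch = fetch_records
        self._records = {}

    def sync(self):
        """Try to pull fresh records; keep the old snapshot on failure."""
        try:
            self._records = dict(self._fetch())
            return True
        except ConnectionError:
            return False

    def resolve(self, name):
        """Answer from the local snapshot; the answer may be stale."""
        return list(self._records.get(name, []))


source_up = True


def fetch_records():
    if not source_up:
        raise ConnectionError("record source unreachable")
    return {"bbs.service.internal": ["10.0.1.5"]}


resolver = AvailableResolver(fetch_records)
resolver.sync()
source_up = False            # simulate a partial outage
resolver.sync()              # fails, but the previous snapshot is kept
print(resolver.resolve("bbs.service.internal"))  # ['10.0.1.5']
```

Because service addresses change rarely in a typical deployment, a stale answer is usually still correct, which is the reasoning behind preferring availability here.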

The details of BOSH-aware DNS are out of the scope of this document. We plan to discuss the details of BOSH DNS in a separate blog post.

Lessons Learned

We want to outline a few lessons learned from this re-engineering effort as a means of reflecting on our experience. We summarize the high-level lessons below:

- Understand the constraints of your environment and tools when adopting new technologies. BOSH is extremely important to Cloud Foundry and helps provide much of its core values (rolling updates, canary deploys, cloud-agnostic deployment and so on). However, in doing so, BOSH imposes some constraints on Cloud Foundry components and it is important to understand these limitations and make sure that newly introduced components are compatible.

- It is important to learn and pay attention to how your current system is being used, and to recognize which of the three CAP theorem properties (Consistency, Availability and Partition Tolerance) you can trade off when engineering new distributed systems solutions.

- Relational databases still have important roles to play in highly distributed systems. For instance, in our case, a relational database not only provided a great place for storing our system’s state, but it could also be used to provide distributed locking at the scale required for Cloud Foundry users.

While we cannot claim that these lessons are applicable to all cloud distributed systems, we urge you to pay attention to them as reminders when designing or evolving your own cloud distributed system.

Conclusion

Based on real world feedback, we concluded that Cloud Foundry’s service discovery needs can be solved using a different approach that improves the platform’s reliability. By using traditional relational databases, already architectural components of Cloud Foundry’s Diego layer, we reduced the number of software systems that had to be maintained for a Cloud Foundry deployment.

Also as discussed above, we realized that availability versus consistency requirements for a DNS service used in a Cloud Foundry deployment can better be addressed by BOSH, which internally has more information about the topology of the deployed Cloud Foundry.

This work is co-authored by Nima Kaviani (IBM) – Adrian Zankich (VMware) – Michael Maximilien (IBM).