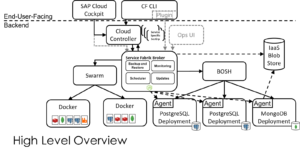

Introduction

Volume services have been available for Cloud Foundry for nearly two years, but making them securely configurable took considerably longer. In this article, we look at the challenges we faced when attempting to use volume services with Cloud Foundry.

A second post will outline the various options customers have to achieve reasonable security. Stay tuned!

A Look at the NFSv3 Security Model

When thinking about how to securely open an NFS server to connections from Cloud Foundry, it is helpful to have a basic understanding of the security model for NFSv3, which remains the most commonly used protocol version.

NFS security is based on the standard POSIX file security model: the user/group/world permission set that normally governs who may read, write and execute a file. The file server and its client machines share that model through a trust relationship established from the client machine’s IP address. The file server keeps a table of the exports it makes available (on Linux, /etc/exports), and for each export it also keeps a CIDR range of the IP addresses allowed to connect to that export, plus some other configuration details we’ll get to in a moment. A line in the export table normally looks something like this:

/export/vol1 10.0.0.0/8(rw,hide,secure,root_squash)

When a client connects to the file server, the server looks at the remote IP address and accepts or rejects the connection based on that address alone. In the example above, any traffic coming from a “10.” address would be accepted. Once traffic is accepted, no individual user authentication is performed. In other words, once the server establishes that the client has an acceptable IP address, it automatically trusts that the client host has checks in place to ensure the user is entitled to the UID (and group IDs) carried in the AUTH_SYS credential of each NFS RPC call.
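To make the IP-only trust decision concrete, here is a minimal Python sketch of the check a server performs against the example export line. It is illustrative only: the parser is our own simplification that handles a single CIDR client specification (real export tables also accept hostnames, wildcards, netgroups and several clients per line), and the function names are hypothetical rather than part of any NFS server.

```python
import ipaddress
import re

# Hypothetical, simplified parser for one exports-style line of the form
#   <path> <client-cidr>(<opt>,<opt>,...)
# Only a single CIDR client spec is supported in this sketch.
EXPORT_LINE = re.compile(r"^(?P<path>\S+)\s+(?P<client>[^\s(]+)\((?P<opts>[^)]*)\)$")

def parse_export(line):
    """Return (path, allowed network, set of options) for one export line."""
    m = EXPORT_LINE.match(line.strip())
    if m is None:
        raise ValueError(f"unsupported export line: {line!r}")
    network = ipaddress.ip_network(m.group("client"))
    options = set(filter(None, m.group("opts").split(",")))
    return m.group("path"), network, options

def client_accepted(network, client_ip):
    # The only question asked here: is the source address inside the range?
    # No user credential is examined at this point.
    return ipaddress.ip_address(client_ip) in network

path, network, options = parse_export("/export/vol1 10.0.0.0/8(rw,hide,secure,root_squash)")
print(client_accepted(network, "10.244.0.17"))  # True: inside 10.0.0.0/8
print(client_accepted(network, "192.168.1.5"))  # False: outside the range
```

Any host that can source traffic from a 10.x address passes this check, regardless of who is using it.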

The other options in the line above add a little extra security. “Hide” means that if another filesystem is mounted beneath the exported directory, it is not exposed through this export; a client must mount it explicitly. “Secure” means that the server only accepts requests originating from a source port below 1024 (a privileged port), so if the client is a Linux host, only privileged users can open the connection. “Root_squash” means that NFS traffic arriving with a UID of 0 is treated as the anonymous user, not the root user.
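The effect of the last two options can be sketched as follows. This is a simplified model based on the Linux exports(5) semantics, not actual server code; the function names are hypothetical, and the anonymous UID of 65534 is an assumption (it is the common default, but servers can override it with the anonuid option).

```python
# Simplified model of two export options; not real NFS server code.
ANON_UID = 65534        # assumed anonymous UID; real servers allow anonuid=...
IPPORT_RESERVED = 1024  # binding a port below this requires privilege on Linux

def source_port_allowed(src_port, options):
    """'secure' rejects requests whose source port is not privileged."""
    if "insecure" in options:
        return True
    return src_port < IPPORT_RESERVED

def effective_uid(request_uid, options):
    """'root_squash' maps UID 0 to the anonymous UID; other UIDs pass through."""
    if request_uid == 0 and "no_root_squash" not in options:
        return ANON_UID
    return request_uid

opts = {"rw", "hide", "secure", "root_squash"}
print(source_port_allowed(811, opts))    # True: privileged source port
print(source_port_allowed(40000, opts))  # False: unprivileged source port
print(effective_uid(0, opts))            # 65534: root is squashed
print(effective_uid(1000, opts))         # 1000: accepted exactly as sent
```

Note that a non-root UID such as 1000 passes through unchanged: neither option verifies that the user on the client really is who the request claims to be.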

But none of the above configurations really changes the basic security story. Once the file server has decided to trust a remote IP address, it is also trusting the authentication on that remote server. If a user has root access on that client host, then they can become any user they want, and effectively gain access to any file on the share. As a result, enterprises using NFS to connect to NAS must tightly constrain which IP addresses are allowed to connect to a share, and rely on network security to keep rogue clients from spoofing IP addresses and illicitly connecting.
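It helps to see how little stands behind that UID. With the usual AUTH_SYS flavor, the user’s identity is a small structure the client fills in itself (defined in RFC 5531 and encoded with XDR, RFC 4506). The sketch below uses a hypothetical helper, not a library API, and encodes only that credential rather than a complete RPC call, to show that claiming a different user is a matter of writing a different integer.

```python
import struct

AUTH_SYS = 1  # auth_flavor value for AUTH_SYS (also called AUTH_UNIX), RFC 5531

def xdr_uint(n):
    """XDR unsigned int: 4 bytes, big-endian."""
    return struct.pack(">I", n)

def xdr_opaque(data):
    """XDR variable-length opaque/string: length, bytes, zero-pad to 4 bytes."""
    pad = (4 - len(data) % 4) % 4
    return xdr_uint(len(data)) + data + b"\x00" * pad

def auth_sys_credential(uid, gid, gids=(), machinename="client", stamp=0):
    """Encode an opaque_auth carrying authsys_parms (hypothetical helper)."""
    name = machinename.encode("ascii")
    if len(name) > 255 or len(gids) > 16:
        raise ValueError("machinename is limited to 255 bytes, gids to 16 entries")
    body = (xdr_uint(stamp)
            + xdr_opaque(name)     # string machinename<255>
            + xdr_uint(uid)        # the client simply asserts this value
            + xdr_uint(gid)
            + xdr_uint(len(gids))  # unsigned int gids<16>
            + b"".join(xdr_uint(g) for g in gids))
    return xdr_uint(AUTH_SYS) + xdr_opaque(body)  # flavor + opaque body<400>

as_alice = auth_sys_credential(uid=1001, gid=1001, machinename="cell-1")
as_bob = auth_sys_credential(uid=1002, gid=1002, machinename="cell-1")
print(len(as_alice), len(as_bob))  # 36 36
# Byte offsets where the two credentials differ: the low bytes of uid and gid.
print([i for i in range(len(as_alice)) if as_alice[i] != as_bob[i]])  # [27, 31]
```

The two credentials differ only in bytes the client chose to write, and nothing in them gives the server a way to check that choice.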


Challenges for Securing NFS in Cloud Foundry

On top of the already challenging (and arguably flawed) security model posed by NFSv3 for regular enterprises, Cloud Foundry adds some additional difficulties:

1. It is difficult to predict where an application will be placed within the available set of Diego cells, and once it has been placed, it is not possible to require it to stay on the same cell. As a result, NFS servers wishing to make shares available to applications in Cloud Foundry must use a CIDR range that includes any cell where the app could be placed. The effective result is that the NFS server cannot use IP security to trust a single application (as it typically would for applications running on a VM) but must instead trust all of Cloud Foundry.

2. Applications running within Cloud Foundry all run as user “vcap” or UID 1000 inside the container. The Garden container runtime uses a user namespace to map that user to a different UID outside the container, but that mapping is the same for every container, with the end result that all Cloud Foundry apps operate as UID 2000 in the Diego cell OS. (For rootless Garden, vcap maps to some other very high UID, but again it is the same UID across all applications.)

As a result, if we use standard Linux kernel mounts, NFS traffic from every application in Cloud Foundry appears to come from the same user, so there is no per-application access control. The NFS server must trust all of Cloud Foundry, and Cloud Foundry runs all apps as the same user, so either all applications have access to a file on a share, or none do.

3. Containers running within a Diego cell are really just processes on that cell, so if container code opens a connection to an NFS server, it looks the same to the NFS server as a legitimate volume mount coming from the Diego cell OS. This means that even though the container lacks the privileges required to perform a real mount operation, there is little to stop an application from speaking the NFS RPC protocol to the server directly, at which point it can place any UID it likes in the request and gain access that way. If the share is marked as “secure”, a normal buildpack app won’t be able to reach it, because its user lacks the CAP_NET_BIND_SERVICE capability and cannot bind a privileged source port (below 1024), but Docker apps have no such limitation. (See the nfsspysh example from https://github.com/bonsaiviking/NfSpy for a client that can send NFS traffic without requiring a mount.)

4. Volume Services are exposed to the application developer as service brokers, which generate service bindings that cause Diego to perform volume mounts. Customers might try to limit access to volume services by enabling service access to the broker only in specific spaces, but this provides only superficial security: any application developer can register a space-scoped service broker, so a malicious user can push their own broker that adds volume mounts to its service bindings.

5. Kerberos, the recommended method for properly securing NFS traffic, has proven difficult to implement in any environment and nearly impossible to use in a cloud native platform such as Cloud Foundry. Because Kerberos context is tightly tied to the running user, a single server component cannot maintain distinct Kerberos identities for different applications.
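The combined effect of items 1 and 2 can be modeled in a few lines. This is a hypothetical sketch, not Cloud Foundry code: it assumes, as described in item 2, that every app reaches the NFS server as the same host UID (2000 under standard Garden), uses made-up app names, and implements a simplified POSIX read check with no ACLs and no root override.

```python
import stat

# Assumption from item 2: every app's "vcap" user appears on the Diego cell,
# and therefore at the NFS server, as the same host UID.
SHARED_HOST_UID = 2000

def may_read(file_uid, file_gid, mode, uid, gids):
    """Simplified POSIX read check (no ACLs, no root override).

    Exactly one class of permission bits applies: owner bits if the caller
    owns the file, else group bits if it is in the file's group, else "other".
    """
    if uid == file_uid:
        return bool(mode & stat.S_IRUSR)
    if file_gid in gids:
        return bool(mode & stat.S_IRGRP)
    return bool(mode & stat.S_IROTH)

def read_decisions(apps, file_uid, file_gid, mode):
    # Every app presents the identical UID, so the check cannot tell them apart.
    return {app: may_read(file_uid, file_gid, mode, SHARED_HOST_UID, set())
            for app in apps}

apps = ["billing", "reporting", "someone-elses-app"]  # hypothetical app names
print(read_decisions(apps, 2000, 2000, 0o600))  # every app: True
print(read_decisions(apps, 3000, 3000, 0o600))  # every app: False
```

Whatever ownership and mode the file has, the answer is the same for every app, which is the all-or-nothing outcome described in item 2.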

This blog post has outlined the principal challenges in making volume services secure. In our next post, we will guide you through the available options for adding that much-needed security back in.